[100-Day AI bootcamp] Day 8: Financial Data Assistant

![[100-Day AI bootcamp] Day 8: Financial Data Assistant](https://growgrow.s3.us-east-2.amazonaws.com/media/blog_images/5321738902254_.pic.jpg)

https://github.com/xkuang/financial-data-assistant

Development Tools:

- Code Development: Cursor - The AI-first code editor

- AI Assistance: Claude (Anthropic) - For rapid development and problem-solving

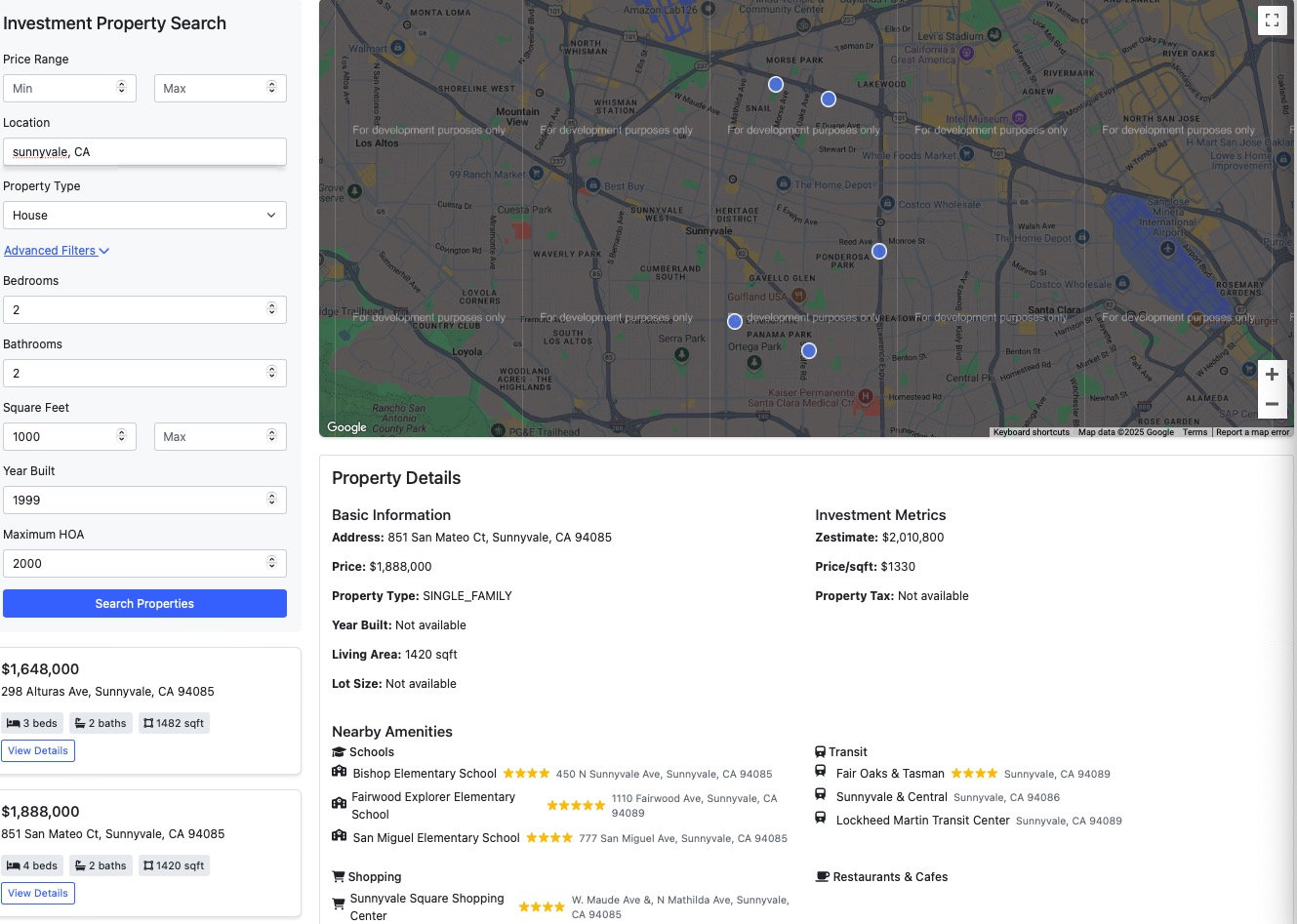

Financial Data Assistant is a platform that aggregates and analyzes data from the FDIC (Federal Deposit Insurance Corporation) and the NCUA (National Credit Union Administration). It includes an automated data pipeline built on Apache Airflow and a Django web interface with SQL querying capabilities.

Features

- Automated data collection from FDIC and NCUA sources

- Daily data freshness checks and updates

- Interactive SQL query interface

- Pre-built analytics queries

- AI-powered chat interface for data exploration

- Comprehensive documentation and API reference
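The platform pulls banks from the FDIC and credit unions from the NCUA. One way to query them side by side is to map both into a shared record shape before loading. The sketch below assumes that approach. The `Institution` dataclass, the helper names, and the column names are hypothetical: `CERT`, `NAME`, `STALP` and `ASSET` for FDIC, and `CU_NUMBER`, `CU_NAME`, `STATE` and `TOTAL_ASSETS` for NCUA. Check them against the files that `fdic_ingestion.py` and `ncua_ingestion.py` actually download.

```python
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class Institution:
    """Hypothetical common record shape for banks (FDIC) and credit unions (NCUA)."""
    source: str          # "FDIC" or "NCUA"
    source_id: str       # identifier used by the source (certificate / charter number)
    name: str
    state: str
    total_assets: float  # as reported by the source; units may differ between sources


def from_fdic(row: Mapping[str, str]) -> Institution:
    # Assumed column names; verify against the real FDIC download.
    return Institution(
        source="FDIC",
        source_id=str(row["CERT"]),
        name=row["NAME"].strip(),
        state=row["STALP"].strip(),
        total_assets=float(row["ASSET"]),
    )


def from_ncua(row: Mapping[str, str]) -> Institution:
    # Assumed column names; verify against the real NCUA files.
    return Institution(
        source="NCUA",
        source_id=str(row["CU_NUMBER"]),
        name=row["CU_NAME"].strip(),
        state=row["STATE"].strip(),
        total_assets=float(row["TOTAL_ASSETS"]),
    )


if __name__ == "__main__":
    # Made-up rows for illustration only.
    banks = [from_fdic({"CERT": "1", "NAME": "Example Bank ", "STALP": "NY", "ASSET": "1500"})]
    unions = [from_ncua({"CU_NUMBER": "42", "CU_NAME": "Example CU", "STATE": "CA", "TOTAL_ASSETS": "900"})]
    # One combined list, largest institutions first.
    for inst in sorted(banks + unions, key=lambda i: i.total_assets, reverse=True):
        print(inst.source, inst.name, inst.total_assets)
```

Freezing the dataclass keeps normalized records immutable once they leave the ingestion step.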

System Requirements

- Python 3.11+

- PostgreSQL 13+

- Redis (for Airflow)

- Virtual environment management tool (venv recommended)
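A quick preflight script can confirm the requirements above before you install anything. This is a minimal sketch and is not part of the repository. It assumes PostgreSQL and Redis are installed locally with `psql` and `redis-server` on `PATH`. It only checks that those executables exist. It does not check that PostgreSQL is version 13 or later.

```python
import shutil
import sys

# Minimum Python version from the System Requirements list.
MIN_PYTHON = (3, 11)

# Executable names assumed for local installs; remote or containerized
# services will not be detected this way.
EXECUTABLES = {"postgresql": "psql", "redis": "redis-server"}


def preflight() -> dict[str, bool]:
    """Return a pass/fail flag for each local prerequisite."""
    results = {"python": sys.version_info[:2] >= MIN_PYTHON}
    for name, exe in EXECUTABLES.items():
        # shutil.which returns None when the executable is not on PATH.
        results[name] = shutil.which(exe) is not None
    return results


if __name__ == "__main__":
    for check, ok in preflight().items():
        print(f"{check:<12} {'OK' if ok else 'MISSING'}")
```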

Directory Structure

.
├── airflow/
│ ├── dags/
│ │ ├── refresh_financial_data_dag.py
│ │ ├── fdic_ingestion.py
│ │ └── ncua_ingestion.py
├── docs/
│ ├── architecture.md
│ ├── data_mechanics.md
│ └── technical_overview.md
├── prospects/
│ ├── models.py
│ ├── views.py
│ └── urls.py
├── templates/
│ ├── base.html
│ ├── chat/
│ └── documentation/
└── manage.py

Installation

- Clone the repository:

git clone https://github.com/xkuang/financial-data-assistant.git
cd financial-data-assistant

- Create and activate a virtual environment:

python -m venv venv
source venv/bin/activate  # On Windows: venv\Scripts\activate

- Install required packages:

pip install -r requirements.txt

- Set up environment variables (create a .env file):

# Django settings
DEBUG=True
SECRET_KEY=your_secret_key_here
ALLOWED_HOSTS=localhost,127.0.0.1

# Database settings
DATABASE_URL=postgresql://user:password@localhost:5432/financial_db

# Airflow settings
AIRFLOW_HOME=/path/to/your/airflow
AIRFLOW__CORE__SQL_ALCHEMY_CONN=sqlite:////path/to/your/airflow/airflow.db
AIRFLOW__CORE__LOAD_EXAMPLES=False

- Initialize the database:

python manage.py migrate
python manage.py createsuperuser
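The variables in `.env` still have to reach Django's settings. The repository may use a helper such as python-dotenv or dj-database-url for this. The sketch below assumes neither and uses only the standard library. It also assumes the values are already exported into the process environment, for example by the shell or by calling `load_dotenv()` from python-dotenv. The helper names `env_bool` and `parse_database_url` are hypothetical.

```python
import os
from urllib.parse import unquote, urlparse


def env_bool(name: str, default: bool = False) -> bool:
    """Interpret common truthy strings ("True", "1", "yes", "on") from the environment."""
    value = os.environ.get(name)
    if value is None:
        return default
    return value.strip().lower() in {"1", "true", "yes", "on"}


def parse_database_url(url: str) -> dict:
    """Turn postgresql://user:password@host:port/name into a Django DATABASES entry."""
    parsed = urlparse(url)
    if parsed.scheme not in {"postgres", "postgresql"}:
        raise ValueError(f"Unsupported database scheme: {parsed.scheme!r}")
    return {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": parsed.path.lstrip("/"),
        "USER": unquote(parsed.username or ""),
        "PASSWORD": unquote(parsed.password or ""),
        "HOST": parsed.hostname or "localhost",
        "PORT": str(parsed.port or 5432),
    }


# These names mirror the variables in the .env example above.
DEBUG = env_bool("DEBUG")
SECRET_KEY = os.environ.get("SECRET_KEY", "")
ALLOWED_HOSTS = [h.strip() for h in os.environ.get("ALLOWED_HOSTS", "").split(",") if h.strip()]
DATABASES = {
    "default": parse_database_url(
        os.environ.get("DATABASE_URL", "postgresql://localhost:5432/financial_db")
    )
}
```

Parsing the URL by hand keeps the sketch dependency-free. In a real project, dj-database-url does the same job and supports more database backends.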

Running the Application

1. Start Airflow Services

Initialize Airflow database (first time only):

airflow db init

Create Airflow user (first time only):

airflow users create \
--username admin \
--firstname Admin \
--lastname User \
--role Admin \
--email admin@example.com \
--password admin

Start Airflow services:

# Start the web server (in a separate terminal)
airflow webserver -p 8081

# Start the scheduler (in another separate terminal)
airflow scheduler

The Airflow UI will be available at: http://localhost:8081

2. Start Django Development Server

python manage.py runserver 8000

The web application will be available at: http://localhost:8000

Available URLs

- Main Application: http://localhost:8000

- Documentation: http://localhost:8000/docs/

- Chat Interface: http://localhost:8000/chat/

- Admin Interface: http://localhost:8000/admin/

- Airflow Interface: http://localhost:8081

Data Pipeline

The Airflow DAG (refresh_financial_data) runs daily at 6 AM and performs the following tasks:

- Checks FDIC website for new data

- Checks NCUA website for new data

- Generates a freshness report

- Updates the database if new data is available
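The check-then-update flow above comes down to one comparison per source: the latest reporting period already loaded versus the latest one the source has published. In Airflow, a daily 6 AM run would typically use a cron schedule such as `0 6 * * *`. The two check tasks would feed the report task, and the report would gate the update task. The plain-Python sketch below models only that decision logic and leaves out fetching from the FDIC and NCUA websites. `SourceStatus`, `freshness_report` and `sources_to_refresh` are hypothetical names, not the DAG's actual task functions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class SourceStatus:
    source: str                     # e.g. "FDIC" or "NCUA"
    loaded_through: Optional[date]  # latest period already in the database (None if never loaded)
    available_through: date         # latest period the source has published


def freshness_report(statuses: list[SourceStatus]) -> dict[str, dict]:
    """Summarize, per source, whether newer data exists than what is loaded."""
    report = {}
    for s in statuses:
        needs_update = s.loaded_through is None or s.available_through > s.loaded_through
        report[s.source] = {
            "loaded_through": s.loaded_through,
            "available_through": s.available_through,
            "needs_update": needs_update,
        }
    return report


def sources_to_refresh(report: dict[str, dict]) -> list[str]:
    """Only stale sources are handed to the database-update step."""
    return sorted(name for name, row in report.items() if row["needs_update"])


if __name__ == "__main__":
    # Made-up reporting periods for illustration only.
    report = freshness_report([
        SourceStatus("FDIC", date(2024, 6, 30), date(2024, 9, 30)),
        SourceStatus("NCUA", date(2024, 9, 30), date(2024, 9, 30)),
    ])
    print(sources_to_refresh(report))  # ['FDIC']
```

Keeping the comparison in a pure function makes it easy to unit test outside Airflow. The DAG tasks then only gather the two dates and act on the result.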

Development

Running Tests

python manage.py test

API Documentation

The application provides several API endpoints for data access:

- /api/institutions/: List of financial institutions

- /api/stats/: Quarterly statistics

- /chat/query/: Natural language query endpoint

- /chat/sql/: SQL query endpoint
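A small standard-library client is enough for scripted access to these endpoints. This is a sketch under assumptions. The `{"query": ...}` JSON body for `/chat/sql/` and the JSON responses are guesses about how the views work. Django's CSRF protection may also reject an external POST unless the view is exempt or a CSRF token is sent. The function names are hypothetical.

```python
import json
from urllib.request import Request, urlopen

BASE_URL = "http://localhost:8000"  # Django development server from "Running the Application"


def build_sql_request(sql: str, base_url: str = BASE_URL) -> Request:
    """Build a POST to /chat/sql/; the {"query": ...} body shape is an assumption."""
    body = json.dumps({"query": sql}).encode("utf-8")
    return Request(
        f"{base_url.rstrip('/')}/chat/sql/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_get_request(path: str, base_url: str = BASE_URL) -> Request:
    """Build a GET for read-only endpoints such as /api/institutions/ or /api/stats/."""
    return Request(f"{base_url.rstrip('/')}/{path.lstrip('/')}", method="GET")


def send(request: Request, timeout: float = 10.0) -> dict:
    """Send the request and decode the body, assuming the endpoint returns JSON."""
    with urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the Django server running, `send(build_get_request("/api/stats/"))` would fetch the quarterly statistics, assuming that endpoint responds with JSON.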

Acknowledgments

- FDIC for providing financial institution data

- NCUA for providing credit union data

- Apache Airflow team for the amazing workflow management platform

- Django team for the robust web framework

Learning Resources

Interview Preparation Courses

Looking to level up your interview skills? Check out these comprehensive courses:

- Grokking the Modern System Design Interview - The ultimate guide developed by Meta & Google engineers. Master distributed system fundamentals and practice with real-world interview questions. (26 hours, Intermediate)

- Grokking the Coding Interview Patterns - Master 24 essential coding patterns to solve thousands of LeetCode-style questions. Created by FAANG engineers. (85 hours, Intermediate)

- Grokking the Low Level Design Interview Using OOD Principles - A battle-tested guide to Object Oriented Design Interviews, developed by FAANG engineers. Master OOD fundamentals with practical examples.

Essential Reading

- Designing Data-Intensive Applications by Martin Kleppmann - The definitive guide to building reliable, scalable, and maintainable systems. A must-read for understanding the principles behind modern data systems.

Hosting Solutions

For deploying your own instance of this project, consider these reliable hosting options:

- DigitalOcean - Simple and robust cloud infrastructure

- DigitalOcean Managed Databases - Fully managed PostgreSQL databases

